What is the topic?

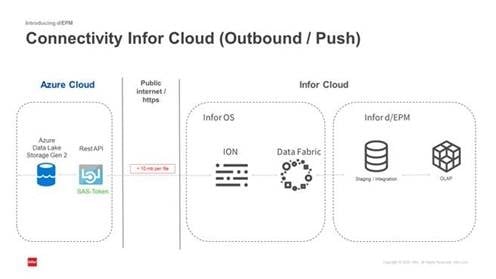

I would like to build a process that sends data (on demand or once a day) from Infor d/EPM to an Azure Cloud Storage Service (as a blob).

What is causing problems?

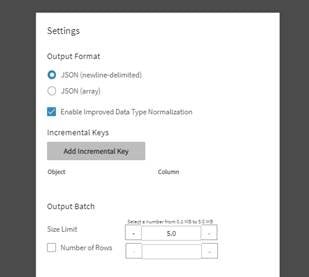

I’m fighting with the OS limits: if I understand correctly, the ION API only supports data chunks of at most 5 MB or 10 MB (or slightly more with a different license). But the data export should cover data sets of 500k to 1,000k entries or more (e.g. 10 columns each), which will exceed this limit.
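To make the size problem concrete, here is a rough back-of-the-envelope estimate in Python. The average field width of 12 bytes is purely an assumption for illustration (I have not measured the real export), and `estimate_chunks` is a hypothetical helper, not part of any Infor or Azure API.

```python
import math

def estimate_chunks(rows, columns, avg_field_bytes, chunk_limit_mb):
    """Return (approximate export size in MB, minimum number of chunks)."""
    # One extra byte per field for the delimiter or the line break.
    total_bytes = rows * columns * (avg_field_bytes + 1)
    limit_bytes = chunk_limit_mb * 1024 * 1024
    return total_bytes / (1024 * 1024), math.ceil(total_bytes / limit_bytes)

for rows in (500_000, 1_000_000):
    # avg_field_bytes=12 is an assumed value, not a measurement.
    size_mb, chunks = estimate_chunks(rows, columns=10, avg_field_bytes=12, chunk_limit_mb=5)
    print(f"{rows} rows: about {size_mb:.0f} MB, at least {chunks} chunks of 5 MB")
```

Even with fairly narrow fields, the export would have to be split into a double-digit number of requests.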

What have I tried already?

I used the Azure Cloud Storage Service REST API (Put Blob) to send the data. This works fine for a small set of records.

To handle larger amounts of data, I tried using a data flow query with a maximum output batch size configured (e.g. 5 MB). But then I only get the rows of the last batch, as it seems that each Put Blob (BlockBlob) call overwrites the data written before.

My question?

Is there a recommended way to overcome this problem? How could I split the data into chunks and send them step by step, appending each one to the data target? Have you done something similar already, especially with an Azure cloud as the data target?
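To illustrate the kind of splitting I mean, here is a minimal Python sketch of one possible pattern: the Azure Blob Storage REST API has a Put Block operation (`comp=block` with a Base64 `blockid`) that stages a chunk without overwriting anything, and a Put Block List operation (`comp=blocklist`) that commits the staged chunks in order. The sketch only plans the requests and sends nothing. The helper names, the example URL, and the assumption that `blob_url` carries no query string (e.g. no SAS token) are mine; authentication headers are left out, and I do not know whether the ION API data flow can issue these calls.

```python
import base64
from urllib.parse import quote

def split_into_batches(lines, max_bytes):
    """Group text lines into byte batches that stay within max_bytes.

    A single line longer than max_bytes still becomes its own batch.
    """
    batch, size = [], 0
    for line in lines:
        encoded = (line + "\n").encode("utf-8")
        if batch and size + len(encoded) > max_bytes:
            yield b"".join(batch)
            batch, size = [], 0
        batch.append(encoded)
        size += len(encoded)
    if batch:
        yield b"".join(batch)

def block_id(index):
    """Block IDs are Base64 strings; all IDs of one blob need the same length."""
    return base64.b64encode(f"block-{index:06d}".encode("ascii")).decode("ascii")

def plan_upload(blob_url, lines, max_bytes):
    """Return (method, url, body) tuples: one Put Block per batch, then Put Block List."""
    requests, ids = [], []
    for index, body in enumerate(split_into_batches(lines, max_bytes)):
        bid = block_id(index)
        ids.append(bid)
        url = f"{blob_url}?comp=block&blockid={quote(bid, safe='')}"
        requests.append(("PUT", url, body))
    # The block list commits the staged blocks in the order given here.
    entries = "".join(f"<Latest>{bid}</Latest>" for bid in ids)
    xml = f'<?xml version="1.0" encoding="utf-8"?><BlockList>{entries}</BlockList>'
    requests.append(("PUT", f"{blob_url}?comp=blocklist", xml.encode("utf-8")))
    return requests

if __name__ == "__main__":
    # Hypothetical account, container, and blob names.
    demo_url = "https://myaccount.blob.core.windows.net/exports/depm.csv"
    rows = [f"{i},value{i}" for i in range(10)]
    for method, url, body in plan_upload(demo_url, rows, max_bytes=40):
        print(method, url, len(body), "bytes")
```

An alternative with the same intent would be an append blob (Put Blob with `x-ms-blob-type: AppendBlob`, followed by Append Block calls with `comp=appendblock`), which adds each chunk to the end of the blob. Which of the two fits the ION limits better is exactly what I am unsure about.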